Alexa Slot Value Synonyms

- Today, we'll focus on Entity Resolution, which lets you add synonyms to your slot values and validate that a user actually said one of them. The Alexa service then resolves the synonyms to your slot values. This simplifies your code, since you don't have to write any logic that matches the synonym the user said to your slot value.

- I have an intent with a custom slot called 'event', whose value comes from my 'LISTOFEVENTS' slot type. This list contains many slot values and, more importantly, many synonyms for each value. To treat all synonyms of a value the same way, I would like to use the ID I have assigned to each slot value.

Consider a skill that describes dogs by size. If the user asks for an example of a big dog, Alexa answers: "The Great Dane is an example of a big dog." In this case, your skill accepts the word "big" as a slot value; other values may include "small" and "medium". Your skill captures "big" as the slot value and passes it on to your AWS Lambda function, so it can respond with "The Great Dane is an example of a big dog."
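
When Entity Resolution is enabled, the request your code receives carries both the raw spoken value and, if Alexa matched a value or synonym, the canonical name and the ID you assigned. The following is a minimal sketch, assuming the request shape documented for Entity Resolution (`resolutions.resolutionsPerAuthority`, status code `ER_SUCCESS_MATCH`); the intent name `EventIntent`, the ID `SUPER_BOWL`, and the spoken value are hypothetical stand-ins for the 'event' slot and 'LISTOFEVENTS' type described above.

```javascript
// Read the Entity Resolution result for a custom slot from an Alexa IntentRequest.
// Falls back to the raw spoken value when Alexa found no match.
function getSlotResolution(request, slotName) {
  const slot = request.intent && request.intent.slots && request.intent.slots[slotName];
  if (!slot) return null;

  const authorities =
    (slot.resolutions && slot.resolutions.resolutionsPerAuthority) || [];
  for (const authority of authorities) {
    // ER_SUCCESS_MATCH: the spoken words matched a slot value or one of its synonyms.
    if (authority.status.code === "ER_SUCCESS_MATCH" && authority.values.length > 0) {
      const { id, name } = authority.values[0].value;
      return { id, name, spoken: slot.value, matched: true };
    }
  }
  // ER_SUCCESS_NO_MATCH, or no resolutions at all: only the raw value is available.
  return { id: null, name: null, spoken: slot.value, matched: false };
}

// Trimmed-down request fragment (hypothetical intent, values, and ID).
const sampleRequest = {
  type: "IntentRequest",
  intent: {
    name: "EventIntent",
    slots: {
      event: {
        name: "event",
        value: "the big game",
        resolutions: {
          resolutionsPerAuthority: [
            {
              authority: "amzn1.er-authority.echo-sdk.<skill-id>.LISTOFEVENTS",
              status: { code: "ER_SUCCESS_MATCH" },
              values: [{ value: { name: "super bowl", id: "SUPER_BOWL" } }],
            },
          ],
        },
      },
    },
  },
};

console.log(getSlotResolution(sampleRequest, "event"));
```

Branching on `id` rather than on the spoken text is what lets every synonym of a value share one code path.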

In this Alexa Skill tutorial for beginners, you will learn how to build a project for the popular voice platform from scratch. We will cover the essentials of building an app for Alexa, how to set everything up on the Amazon Developer Portal, and how to use Jovo to build your Skill's logic.

What We're Building

To get you started as quickly as possible, we're going to create a simple Skill that responds with 'Hello World!'

Please note: This is a tutorial for beginners and explains the essential steps of Alexa Skill development in detail. If you already have experience with Alexa and just want to learn more about how to use Jovo, either skip the first few sections and go right to Build Your Skill's Code, or take a look at the Jovo Documentation.

How Alexa Skills Work

In this section, you will learn more about the architecture of Alexa and how users interact with its Skills. An Alexa Skill interaction basically consists of speech input (your user's request) and output (your Skill's response).

A few steps happen before a user's speech input reaches your Skill. The voice input process (from left to right) consists of three stages that happen in three different places:

- A user talking to an Alexa enabled device (speech input), which is passed to...

- the Alexa API which understands what the user wants (through natural language understanding), and creates a request, which is passed to...

- your Skill code which knows what to do with the request.

The third stage is where your magic happens. The voice output process (from right to left) passes through the stages again in reverse:

- Your Skill code now turns the input into a desired output and returns a response to...

- the Alexa API, which turns this response into speech via text-to-speech, sending sound output to...

- the Alexa enabled device, where your user is happily waiting and listening
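
In the output direction, "returning a response" means sending JSON back to the Alexa API, which then runs text-to-speech on it. Here is a minimal sketch of that JSON, assuming the standard Alexa response format (`outputSpeech`, optional `reprompt`, `shouldEndSession`); the helper name `buildSpeechResponse` is hypothetical.

```javascript
// Build the JSON a skill returns to the Alexa service.
function buildSpeechResponse(text, { reprompt = null, endSession = true } = {}) {
  const response = {
    outputSpeech: { type: "PlainText", text },
    shouldEndSession: endSession,
  };
  if (reprompt) {
    // Spoken again if the user stays silent while the session is open.
    response.reprompt = { outputSpeech: { type: "PlainText", text: reprompt } };
  }
  return { version: "1.0", response };
}

const reply = buildSpeechResponse("Hello World!");
console.log(JSON.stringify(reply, null, 2));
```

Frameworks such as Jovo generate this structure for you; seeing it once makes the later request and response logs easier to read.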

In order to make the Skill work, we first need to configure it, so that the Alexa API knows which data to pass to your application (and where to pass it). We will do this on the Amazon Developer Portal.

Create a Skill on the Amazon Developer Portal

The Amazon Developer Portal is the console where you can add your Skill as a project, configure the language model, test if it's working, and publish it to the Alexa Skill Store.

Please note: With the new Jovo CLI, you don't have to go through all the steps in the Developer Portal, as you can create a new Skill project and Interaction Model with the jovo deploy command. However, we believe it's good practice for beginners to understand how Alexa Skills work. Let's get started:

Log in with your Amazon Developer Account

Go to developer.amazon.com and click 'Developer Console' on the upper right:

Now either sign in with your Amazon Developer account or create a new one. To simplify things, make sure to use the same account that's registered with your Alexa enabled device (if possible) for more seamless testing.

Great! You should now have access to your account. This is what your dashboard of the Amazon Developer Console looks like:

Create a new Skill

Now it's time to create a new project on the developer console. Click on the 'Alexa' menu item in the navigation bar and choose 'Alexa Skills Kit' to access your Alexa Skills:

Let's create a new Skill by clicking on the blue button to the upper right:

The next step is to give the Skill a name:

Alexa is available in different countries and languages, like the US, UK, and Germany. A Skill can support more than one language (although you have to repeat all of the following steps for each one). Make sure to use the language associated with the Amazon account that is linked to your Alexa enabled device, so you can test without any problems. In our case, it will be English (U.S.) for the United States.

After this step, choose Custom as the Skill model:

In the following steps, we will create a language model that works with Alexa.

Create a Language Model

After successfully creating the Skill, the screen looks like this:

As you can see in the left sidebar, an Interaction Model consists of an Invocation, Intents, and Slot Types.

But first, let's take a look at how natural language understanding (NLU) with Alexa works.

An Introduction to Alexa Interaction Models

Alexa helps you with several steps in processing input. First, it takes a user's speech and transforms it into written text (speech to text). Afterward, it uses a language model to make sense out of what the user means (natural language understanding).

A simple interaction model for Alexa consists of the following elements: Invocation, intents, utterances, and slots.

Invocation

Your Alexa Skill has two types of names: the Name is what people see in their Alexa app and the Alexa Skill Store, while the Invocation Name is what your users say to access your Skill:

Intents

An intent is something a user wants to achieve while talking to your product. It is the basic meaning that can be stripped away from the sentence or phrase the user is telling you. And there can be several ways to end up at that specific intent.

For example, users could express the FindRestaurantIntent from the image above in many different ways. In Alexa language models, these are called utterances:

Utterances

An utterance (sometimes called a user expression) is the actual sentence a user says. There is often a large variety of utterances that fit into the same intent, and sometimes they contain parts that vary even more. This is where slots come into play:

Slots

No matter if I'm looking for a super cheap place, a pizza spot that serves Pabst Blue Ribbon, or a dinner restaurant to bring a date, generally speaking it serves one purpose (user intent): to find a restaurant. However, the user is passing some more specific information that can be used for a better user experience. These are called slots:
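
To make the idea of a slot concrete, here's a toy sketch that treats an utterance template with `{slot}` placeholders as a pattern and pulls out the slot values. This is only an illustration: Alexa's natural language understanding is statistical and far more flexible than a regular expression, and the template and slot names `cuisine` and `city` are hypothetical.

```javascript
// Toy illustration only: maps the words in a sentence onto {slot} placeholders.
function escapeRegExp(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

function matchUtterance(template, sentence) {
  const slotNames = [];
  // Split into literal text and {slot} placeholders; placeholders become capture groups.
  const pattern = template
    .split(/(\{\w+\})/)
    .map((part) => {
      const m = part.match(/^\{(\w+)\}$/);
      if (m) {
        slotNames.push(m[1]);
        return "(.+?)";
      }
      return escapeRegExp(part);
    })
    .join("");
  const match = new RegExp(`^${pattern}$`, "i").exec(sentence.trim());
  if (!match) return null;
  const slots = {};
  slotNames.forEach((name, i) => {
    slots[name] = match[i + 1];
  });
  return slots;
}

console.log(matchUtterance("find a {cuisine} restaurant in {city}", "Find a pizza restaurant in Berlin"));
// { cuisine: 'pizza', city: 'Berlin' }
```

The intent stays the same (finding a restaurant); only the slot values change from request to request.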

Choose an Invocation Name

Make sure to choose an invocation name that can be understood by Alexa. For this simple tutorial, we can just go with HelloWorld (name, done during Skill creation) and hello world (invocation name):

Create a HelloWorldIntent

For our simple voice app we only need to create two intents and add a few sample utterances, as well as a slot. So let's dive into the Amazon Developer Console and do this.

Click on the '+ Add' button to add our 'HelloWorldIntent':

And add the following example phrases to the 'Sample Utterances':

Create the 'MyNameIsIntent' next with the following utterances:

After you do that, you will see that the console automatically added an intent slot called 'name', but we still have to assign a slot type so our Skill knows what kind of input to expect. In our case it's 'AMAZON.US_FIRST_NAME':

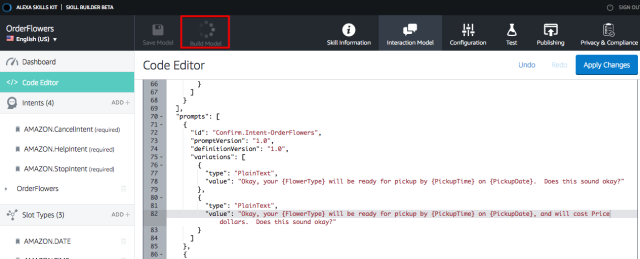
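
The console steps above produce an interaction model that can also be viewed in the JSON Editor. The following sketch writes it as a JavaScript object in roughly that shape and adds a small check that every `{slot}` used in a sample utterance is declared on its intent. The sample utterances are assumptions, since the original ones were only shown in screenshots, and `findUndeclaredSlots` is a hypothetical helper.

```javascript
// Approximation of the HelloWorld interaction model (sample utterances are assumed).
const interactionModel = {
  languageModel: {
    invocationName: "hello world",
    intents: [
      { name: "HelloWorldIntent", slots: [], samples: ["hello", "say hello", "say hello world"] },
      {
        name: "MyNameIsIntent",
        slots: [{ name: "name", type: "AMAZON.US_FIRST_NAME" }],
        samples: ["{name}", "my name is {name}", "i am {name}", "you can call me {name}"],
      },
    ],
    types: [],
  },
};

// Lists placeholders that appear in samples but are not declared as slots.
function findUndeclaredSlots(model) {
  const problems = [];
  for (const intent of model.languageModel.intents) {
    const declared = new Set((intent.slots || []).map((s) => s.name));
    for (const sample of intent.samples) {
      for (const [, slotName] of sample.matchAll(/\{(\w+)\}/g)) {
        if (!declared.has(slotName)) {
          problems.push(`${intent.name}: "${sample}" uses undeclared {${slotName}}`);
        }
      }
    }
  }
  return problems;
}

console.log(findUndeclaredSlots(interactionModel)); // []
```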

That's all we need. Now click on the 'Build Model' button on the top:

Build Your Skill's Code

Now let's build the logic of our Alexa Skill.

We're going to use our Jovo Framework which works for both Alexa Skills and Actions on Google Home.

Install the Jovo CLI

The Jovo Command Line Tools (see the GitHub repository) offer a great starting point for your voice application, as it makes it easy to create new projects from templates.

This should be downloaded and installed now (see our documentation for more information like technical requirements). After the installation, you can test if everything worked with the following command, which should return the current version of the CLI:

Create a new Project

Let's create a new project with the following command (the 'helloworld' template is the default template and will clone our Jovo Sample App into the specified directory):

A First Look at the Project

Let's take a look at the code provided by the sample application. This is what the folder structure looks like:

For now, you only have to touch the app.js file in the src folder. This is where all the configurations and app logic will happen. Learn more about the Jovo Project Structure here.

Let's take a look at the App Logic first:

Understanding the App Logic

The setHandler method is where you will spend most of your time when you're building the logic behind your Alexa Skill. It already has a 'HelloWorldIntent' and a 'MyNameIsIntent', as you can see below:

What's happening here? When your Skill is opened, it triggers the LAUNCH intent, which contains a toIntent call that switches to the HelloWorldIntent. There, the ask method is used to ask the user for their name.

If a user responds with a name, the MyNameIsIntent is triggered. Here, the tell method is called to respond to your users with a 'Nice to meet you!'
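
The flow can be traced without the framework. The following is a plain JavaScript sketch, not the Jovo API: `ask` keeps the session open and waits for an answer, `tell` ends it, and LAUNCH simply forwards to HelloWorldIntent the way `toIntent` does. The spoken strings and the `handle` helper are hypothetical.

```javascript
// Framework-free model of the LAUNCH -> HelloWorldIntent -> MyNameIsIntent flow.
const ask = (speech, reprompt) => ({ speech, reprompt, endSession: false });
const tell = (speech) => ({ speech, endSession: true });

const handlers = {
  LAUNCH: () => handlers.HelloWorldIntent(), // mirrors toIntent('HelloWorldIntent')
  HelloWorldIntent: () => ask("Hello World! What's your name?", "Please tell me your name."),
  MyNameIsIntent: (slots) => tell(`Hey ${slots.name}, nice to meet you!`),
};

// Routes an intent name (plus slot values) to its handler.
function handle(intentName, slots = {}) {
  const handler = handlers[intentName];
  if (!handler) return tell("Sorry, I didn't get that.");
  return handler(slots);
}

console.log(handle("LAUNCH"));
console.log(handle("MyNameIsIntent", { name: "Ada" }));
```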

Where to Run Your Code

So where do we send the response to? Let's switch tabs once again and take a look at the Amazon Developer Console, this time the Endpoint section:

To make a connection between the Alexa API and your application, you need to either upload your code to AWS Lambda, or provide an HTTPS endpoint (a webhook).

Jovo supports both. For local prototyping and debugging, we recommend using HTTPS (which we are going to describe in the next step), but you can also jump to the Lambda section.

Local Prototyping with the Jovo Webhook

The index.js file comes with off-the-shelf server support so that you can start developing locally as easily as possible.

Let's try that out with the following command (make sure to go into the project directory first):

This will start the Express server and look like this:

As you can see above, Jovo automatically creates a link to your local server: the Jovo Webhook. Paste the link into the field in the Amazon Developer Console and choose the second option for the SSL Certificate (the link the Jovo Webhook provides is a secure subdomain):
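
Under the hood, a webhook endpoint is just an HTTP server that accepts Alexa's JSON request via POST and answers with response JSON. Here is a framework-free sketch using Node's built-in `http` module; the function names are hypothetical, it serves plain HTTP (Alexa only calls HTTPS URLs, which is the layer the Jovo Webhook link provides), and it skips the request signature verification a production endpoint needs.

```javascript
const http = require("http");

// Pure function: Alexa request JSON in, Alexa response JSON out.
function handleAlexaRequest(body) {
  const text =
    body.request && body.request.type === "LaunchRequest"
      ? "Hello World!"
      : "Sorry, I can only say hello.";
  return {
    version: "1.0",
    response: { outputSpeech: { type: "PlainText", text }, shouldEndSession: true },
  };
}

// Wraps the handler in an HTTP server; call .listen(port) to start it.
function createWebhookServer() {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.statusCode = 405;
      res.end();
      return;
    }
    let raw = "";
    req.on("data", (chunk) => {
      raw += chunk;
    });
    req.on("end", () => {
      try {
        const reply = handleAlexaRequest(JSON.parse(raw));
        res.writeHead(200, { "Content-Type": "application/json" });
        res.end(JSON.stringify(reply));
      } catch (err) {
        res.statusCode = 400; // malformed JSON
        res.end();
      }
    });
  });
}
```

Usage: `createWebhookServer().listen(3000);` then POST a request JSON to `http://localhost:3000`. Keeping `handleAlexaRequest` separate from the server makes it testable without any network.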

Great! Your voice app is now running locally and ready to test. If you're interested in how to set up Lambda, read further. If you want to dive right into the testing, jump to 'Hello World!'.

Host your Code on AWS Lambda

AWS Lambda is a serverless hosting solution by Amazon. Many Skills are hosted on this platform, as it is a cheap alternative to other hosting providers, and also Amazon offers additional credits for Alexa Skill developers. In this section, you'll learn how to host your Skill on Lambda. This usually takes a few steps, so be prepared. If you only want to get an output for the first time, go back up to Local Prototyping.

In the next steps, we are going to create a new Lambda function on the AWS Developer Console.

Create a Lambda Function

Go to the AWS Management Console:

Search for 'lambda' or go directly to console.aws.amazon.com/lambda:

Click 'Create a Lambda function', choose 'Author from scratch' and fill out the form:

You can either choose an existing role (if you have one already), or create a new one. We're going to create one from a template and call it 'myNewRole' with no special policy templates.

Now it's time to configure your Lambda function. Let's start by adding the Alexa Skills Kit as a trigger:

You can enable skill ID verification if you want, but it's not necessary.

Upload Your Code

Now let's get to the fun part. You can either enter the code inline, upload a zip file, or upload a file from Amazon S3. As we're using dependencies like the jovo-framework npm package, we can't use the inline editor. Instead, we're going to zip our project and upload it to the function.

To create a zip file that is ready to upload, run the following command:

This will create an optimized bundle.zip file in your project directory, which includes all necessary dependencies.

Let's go back to the AWS Developer Console and upload the zip:

Now save your changes with the orange button in the upper right corner:

Test Your Lambda Function

Great! Your Lambda function is now created. Click 'Test' right next to the 'Save' button and select 'Alexa Start Session' as the event template:

Click 'Test,' aaand 🎉 it works!

Add ARN to Alexa Skill Configuration

Copy the ARN at the upper right corner:

Then go to the Configuration step of your Alexa Skill in the Amazon Developer Console and enter it:

Great! Now it's time to test your Skill:

Hello World

Go to 'Test' and enable your Skill for testing:

Wanna get your first 'Hello World!'? You can test it with the Alexa Service Simulator, on your device, or on your phone.

Test Your Skill in the Service Simulator

To use the simulator simply invoke your Skill:

This will create a JSON request and test it with your Skill. And if you look to the right: TADA 🎉! There is your response with 'Hello World!' as output speech.

Test Your Skill on an Alexa Enabled Device

Once the Skill is enabled to test, you can use a device like Amazon Echo or Echo Dot (which is associated with the same email address you used for the developer account) to test your Skill:

Test Your Skill on Your Phone

Don't have an Echo or Echo Dot handy, but still want to listen to Alexa's voice while testing your Skill? You can use the Alexa App on iOS and Android.

Next Steps

Great job! You've gone through all the necessary steps to prototype your own Alexa Skill. The next challenge is to build a real Skill. For this, take a look at the Jovo Documentation to see what else you can do with our Framework.

Recently I published my first skill for Amazon’s Alexa voice service called BART Control. This skill used a variety of technologies and public APIs to become useful. Specifically, I developed the skill with Node.js and the AWS Lambda service. However, that is only a high-level overview of what was done to make the Amazon Alexa skill possible. What must be done to get a functional skill that works on Amazon Alexa powered devices?

We’re going to see how to create a simple Amazon Alexa skill using Node.js and Lambda that works on various Alexa powered devices such as the Amazon Echo.

To be clear, I will not be showing you how to create the BART Control skill that I released, as it is closed source. Instead we’ll be working on an even simpler project, just to get your feet wet. We’ll explore more complicated skills in the future. The skill we’ll create will tell us something interesting upon request.

The Requirements

While we don’t need an Amazon Alexa powered device such as an Amazon Echo to make this tutorial a success, it is certainly nice to have. My Amazon Echo is great!

Here are the few requirements that you must satisfy before continuing:

- Node.js 4.0 or higher

- An AWS account

Lambda is a part of AWS. While you don’t need Lambda to create a skill, Amazon has made it very convenient to use for this purpose. There is a free tier to AWS Lambda, so it should be relatively cheap, if not free. Lambda supports a variety of languages, but we’ll be using Node.js.

Building a Simple Node.js Amazon Alexa Skill

We need to create a new Node.js project. As a Node.js developer, you’re probably most familiar with the Express Framework, as it is a common choice amongst developers. Lambda does not use Express; instead, it uses its own handler design.

Before we get too far ahead of ourselves, create a new directory somewhere on your computer. I’m calling mine alexa-skill-the-polyglot and putting it on my desktop. Inside this project directory we need to create the following directories and files:

If your Command Prompt (Windows) or Terminal (Mac and Linux) doesn’t have the mkdir and touch commands, go ahead and create the directories and files manually.

We can’t start developing the Alexa skill yet. First we need to download the Alexa SDK for Node.js. To do this, execute the following from your Terminal or Command Prompt:

The SDK makes development incredibly easy in comparison to what it was previously.

Before we start coding the handler file for our Lambda function, let’s come up with a dataset to be used. Inside the src/data.js file, add the following:

The above dataset is very simple. We will have two different scenarios. If the user asks about Java we have two possible responses. If the user asks about Ionic Framework, we have three possible responses. The goal here is to randomize these responses based on the technology the user requests information about.
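
The original data file isn't reproduced here, but its shape follows from the description: one list of responses per technology, keyed by the slot value. The following sketch uses placeholder strings in place of the real facts, and `getRandomResponse` is a hypothetical helper for the randomization step.

```javascript
// Shape of src/data.js: responses keyed by technology (strings are placeholders).
const data = {
  java: [
    "Placeholder Java fact one.",
    "Placeholder Java fact two.",
  ],
  "ionic framework": [
    "Placeholder Ionic Framework fact one.",
    "Placeholder Ionic Framework fact two.",
    "Placeholder Ionic Framework fact three.",
  ],
};

// Returns a random response for the technology, or null if it is unknown.
function getRandomResponse(dataset, technology) {
  const responses = dataset[technology];
  if (!responses || responses.length === 0) return null;
  return responses[Math.floor(Math.random() * responses.length)];
}

console.log(getRandomResponse(data, "java"));
```

Returning `null` for unknown technologies leaves the choice of error message to the intent handler.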

Now we can take a look at the core logic file.

Open the project’s src/index.js file and include the following code. Don’t worry, we’re going to break everything down after.

So what exactly is happening in the above code?

The first thing we’re doing is including the Alexa Skills Kit SDK and the dataset that we plan to use within our application. The handlers object is where all the magic happens.

Alexa has a few different lifecycle events. You can manage when a session is started, when a session ends, and when the skill is launched. The LaunchRequest event is when a skill is specifically opened. For example:

Alexa, open The Polyglot

The above command will open the skill The Polyglot and trigger the LaunchRequest event. The other lifecycle events trigger based on usage. For example, a session may not end immediately. There are scenarios where Alexa may ask for more information and keep the session open until a response is given.

In any case, our simple skill will not make use of the session events.

In our LaunchRequest event, we tell the user what they can do and how they can get help. The ask function will keep the session open until a response is given. Based on the request given, a different set of commands will be executed further down in our code.

This brings us to the other handlers in our code.

If you’re not too familiar with how Alexa skills work, you have intents that perform actions. You can have as many as you want, and each one is triggered based on what Alexa detects in the user’s phrases.

For example:

If the AboutIntent is triggered, Alexa will respond with a card in the mobile application as well as spoken answers. We don’t know what triggers the AboutIntent yet, but we know that is a possible option.
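
A card is simply an extra field in the response next to the speech. The following minimal sketch assumes the standard Simple card format (`type`, `title`, `content`); the helper name and the wording are placeholders for whatever the AboutIntent should actually say.

```javascript
// Response that speaks and also shows a Simple card in the Alexa app.
function speakWithCard(speech, cardTitle, cardContent) {
  return {
    version: "1.0",
    response: {
      outputSpeech: { type: "PlainText", text: speech },
      card: { type: "Simple", title: cardTitle, content: cardContent },
      shouldEndSession: true,
    },
  };
}

// Placeholder text for the AboutIntent.
const aboutReply = speakWithCard(
  "The Polyglot was written by <author name>.",
  "About The Polyglot",
  "The Polyglot was written by <author name>."
);
```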

The intent above is a bit more complicated, because it expects a parameter to be passed. These parameters are known as slot values, and they vary with the user’s request.

If the user provides java as the parameter, the randomization function will get a Java response from our data file. The same applies to ionic framework. If neither was used, we default to some kind of error response.

In a production scenario you probably want to do a little better than just have two possible parameter options. For example, what if the user says ionic rather than ionic framework? Or what happens if Alexa interprets the user input as tonic rather than ionic? These are scenarios that you have to account for.
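
One way to handle those variations in code is to normalize the heard value through an alias table before looking it up. This is a sketch, assuming a hand-maintained alias list (the entries, including mapping the mis-hearing "tonic", are illustrative). Custom slot type synonyms with Entity Resolution can do the same mapping on Alexa's side instead.

```javascript
// Alias table: heard value -> canonical dataset key (entries are illustrative).
const ALIASES = {
  java: "java",
  ionic: "ionic framework",
  "ionic framework": "ionic framework",
  tonic: "ionic framework", // likely mis-hearing of "ionic"
};

// Lowercases, trims, and collapses whitespace before the lookup.
function normalizeLanguage(spoken) {
  if (typeof spoken !== "string") return null;
  const key = spoken.trim().toLowerCase().replace(/\s+/g, " ");
  return Object.prototype.hasOwnProperty.call(ALIASES, key) ? ALIASES[key] : null;
}

// Falls back to an error response for anything unknown.
function respondToLanguage(spoken) {
  const language = normalizeLanguage(spoken);
  return language
    ? `Here is something about ${language}.`
    : "Sorry, I only know about Java and Ionic Framework.";
}
```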

This brings us to the handler function that Lambda uses:

In this function we initialize everything. We define the application id, register all the handlers we just created, and execute them. Lambda recognizes this handler function as index.handler because our file is called index.js.
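
Setting the application ID is there so the function rejects requests that don't come from your own skill. The following sketch shows roughly that kind of check in plain JavaScript; it is not the SDK's internal code. The expected ID is a placeholder, and the helper names are hypothetical. It reads the ID from `context.System` first and falls back to `session`, since some request types carry no session.

```javascript
// Placeholder: replace with the application ID from your skill's page.
const EXPECTED_APP_ID = "amzn1.ask.skill.00000000-0000-0000-0000-000000000000";

// Reads the skill ID from the request envelope (requires Node.js 14+ for ?. and ??).
function getApplicationId(event) {
  return (
    event.context?.System?.application?.applicationId ??
    event.session?.application?.applicationId ??
    null
  );
}

// True only when the request was sent to the expected skill.
function isFromMySkill(event, expectedId = EXPECTED_APP_ID) {
  return getApplicationId(event) === expectedId;
}
```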

Let’s come up with those phrases that can trigger the intents in our application.

Creating a List of Sample Phrases Called Utterances

Amazon recommends you have as many phrases as you can possibly think up. During the deployment process to the Alexa Skill Store and AWS Lambda, you’ll need an utterances list.

For our example project, we might have the following utterances for the AboutIntent that we had created:

I listed four possible phrases, but I’m sure you can imagine that there are so many more possibilities. Try to think of everything the user might ask in order to trigger the AboutIntent code.

Things are a bit different when it comes to our other intent, LanguageIntent, because there is an optional parameter. Utterances for this intent might look like the following:

Notice my use of the {Language} placeholder. Alexa will fill in the gap and treat whatever falls into that slot as a parameter. That same parameter name will be used in the JavaScript code.
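
The utterances list pairs each phrase with its intent, one per line, in the form `IntentName sample phrase`. Here is a sketch, assuming that flat format, that parses such a list and collects the slot placeholders per intent, which is handy for spotting a misspelled `{Language}`. The sample phrases are made up for illustration, and `parseUtterances` is a hypothetical helper.

```javascript
// Parses "IntentName sample phrase" lines into { IntentName: { samples, slots } }.
function parseUtterances(text) {
  const intents = {};
  for (const rawLine of text.split("\n")) {
    const line = rawLine.trim();
    if (!line) continue;
    const firstSpace = line.indexOf(" ");
    if (firstSpace === -1) continue; // intent name without a phrase
    const intent = line.slice(0, firstSpace);
    const phrase = line.slice(firstSpace + 1).trim();
    const entry = intents[intent] || (intents[intent] = { samples: [], slots: new Set() });
    entry.samples.push(phrase);
    // Record every {Slot} placeholder used in the phrase.
    for (const [, slot] of phrase.matchAll(/\{(\w+)\}/g)) entry.slots.add(slot);
  }
  return intents;
}

// Illustrative utterances, not the article's original list.
const sampleUtterances = `
AboutIntent who created this skill
AboutIntent tell me about the author
LanguageIntent tell me about {Language}
LanguageIntent give me a fact about {Language}
`;
const parsed = parseUtterances(sampleUtterances);
console.log(parsed.LanguageIntent.slots);
```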

Again, it is very important to come up with every possible phrase. Not coming up with enough phrase possibilities will lead to a poor user experience.

Can you believe the development portion is done? Now we can focus on the deployment of our Amazon Alexa skill.

Deploying the Skill to AWS Lambda

Deployment is a two-part process. Not only do we need to deploy to AWS Lambda, but we also need to deploy to the Amazon Alexa Skill Store.

Starting with the AWS Lambda portion, log into your AWS account and navigate to Lambda.

At this point we work towards creating a new Lambda function. At the time of writing, it was important to choose Northern Virginia as your region, because it was the only region that supported Alexa with Lambda. With that said, let’s go through the process.

Choose Create a Lambda function, but don’t select a blueprint from the list. Go ahead and continue. Choose Alexa Skills Kit as your Lambda trigger and proceed ahead.

On the next screen you’ll want to give your function a name and choose to upload a ZIP archive of the project. The ZIP that you upload should only contain the two files that we created as well as the node_modules directory, and nothing else. We also want to set the role to lambda_basic_execution.

After you upload we can proceed to linking the Lambda function to an Alexa skill.

To create an Alexa skill you’ll want to log into the Amazon Developer Dashboard, which is not part of AWS. It is part of the Amazon App Store for mobile applications.

Once you are signed in, you’ll want to select Alexa from the tab list followed by Alexa Skills Kit.

From this area you’ll want to choose Add a New Skill and start the process. On the Skill Information page you’ll be able to obtain an application id after you save. This is the id that should be used in your application code.

In the Interaction Model we need to define an intent schema. For our sample application, it will look something like this:

The slot type for our LanguageIntent is a list that we must define. Create a custom slot type and include the following possible entries:
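
Here is a sketch of the intent schema and custom slot type for this skill, written as JavaScript objects so they can be sanity-checked before you paste them in. It assumes the legacy intent schema shape (`intents`, `intent`, `slots`); the slot type name `LIST_OF_LANGUAGES` is hypothetical, and `missingSlotTypes` is a hypothetical helper that flags custom types referenced in the schema but never defined.

```javascript
// Legacy-style intent schema for The Polyglot (slot type name is hypothetical).
const intentSchema = {
  intents: [
    { intent: "AboutIntent" },
    { intent: "LanguageIntent", slots: [{ name: "Language", type: "LIST_OF_LANGUAGES" }] },
  ],
};

// Custom slot type values, entered one per line in the console.
const customSlotTypes = {
  LIST_OF_LANGUAGES: ["java", "ionic framework"],
};

// Returns custom slot types used by the schema that have no value list.
function missingSlotTypes(schema, types) {
  const missing = new Set();
  for (const intent of schema.intents) {
    for (const slot of intent.slots || []) {
      const isBuiltIn = slot.type.startsWith("AMAZON.");
      if (!isBuiltIn && !(slot.type in types)) missing.add(slot.type);
    }
  }
  return [...missing];
}
```

In the newer JSON interaction model, each slot value can also carry an `id` and a list of `synonyms` (for example, `ionic` as a synonym of `ionic framework`), which is how Entity Resolution addresses the "ionic" versus "ionic framework" problem without extra code.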

Go ahead and paste the sample utterances that we had created previously.

In the Configuration section, use the ARN from the Lambda function that we created. It can be found in the AWS dashboard. Go ahead and fill out all other sections to the best of your ability.

Conclusion

You just saw how to create a very simple skill for Amazon’s Alexa voice assistant. While our skill only had two possible intent actions, one of the intents had parameters while the other did not. This skill was developed with Node.js and hosted on AWS Lambda. The user is able to ask about the author of the skill as well as information about Java or Ionic Framework.

A video version of this article can be seen below.

Nic Raboy

Nic Raboy is an advocate of modern web and mobile development technologies. He has experience in Java, JavaScript, Golang and a variety of frameworks such as Angular, NativeScript, and Apache Cordova. Nic writes about his development experiences related to making web and mobile development easier to understand.